So I tried out this whole vibe engineering thing for 8 hrs. Here’s the tale.

If you haven’t heard the term, vibe engineering is basically letting AI do a lot of the heavy lifting in prototyping or idea shaping. You talk to it, give it some instructions, let it go off and build something semi-coherent, and keep refining. It’s fast. It’s wild. It’s like having a caffeinated intern who doesn’t sleep and has a mild disregard for boundaries.

But here’s the thing: if you want anything more than a one-off script, you have to slow down and think differently. Especially if you’re a Python scripter like me.

My Use Case

I wanted realistic interview transcripts for my target group. My intended data flow was 6 steps:

provide a venture idea

generate a promising target group (GPT 4.0)

for this group, generate sub-groups w/ prevalence weights (GPT 4.0)

generate a weighted pool of realistic user profiles (GPT 4.0 + Reddit crawler)

generate interview question set for this target group

for each profile, generate a realistic 1:1 interview transcript

A note on the profiles: I wanted to seed a pool of fake-but-realistic research subjects, with names, titles, locations, personal quirks, strengths, weaknesses, preferred tools, hated tools — the “complete picture”. Sort of like RPG character sheets for customer discovery.

Confession: this all started as a way to get around my homework for Idea Factory Cohort 11, which was to identify and interview target customers within my opportunity space. I knew the genius behind the program, Luke Rabin (who emphasizes that a real life conversations with customers are a magic ingredient to long-term start-up success) would NOT appreciate me attempting to skirt hard work with AI. On top of that, my programmer/tech friends would also judge me hard for the vibe engineering session itself. Anyways, I absolve myself; I am nothing if not imperfect.

In-depth narrative

The first attempt was pretty dismal. On one hand, I didn’t use conversational context, which baked in by default something Luke always says: “As much as possible, leave your start-up idea outside of the interview session.” When asking for interview profiles, the AI has no way of attempting to generate them in the context of my start-up idea; rather, they would be generated with no start-up bias.

On the other hand, my explicit isolated commands to “be as realistic as possible, such that the generated profile is a sampled randomly and realistically, is as if it were picked out of a bucket of real life individuals from this target.” Instead, it basically gave me 10 boring copies of the same watered down, averaged out, stereotpyical (with a thin veneer of political correctedness). In this case:

- 7 of 10 were named Samantha or Sarah.

- 5 of 10 were between age 34 and 40 (and 10 of 10 between 25 and 48).

- 7 of 10 were located in Austin, TX.

- 7 of 10 had 8 years of work experience.

I was able to improve these metrics by tweaking the prompt, but it revealed a fundamental bias of the AI to generate popular, non-unique, generalized content. My last attempt made me sound like a desperate Hollywood director talking to his actors on set who can’t seem to play their part authentically:

“…Feel the rawness of each individual. Do not be afraid of their incomplete and messy life story. Do not be afraid to “accidentally” slip in subtle, revealing, and possibly even anomalous details that might reveal larger arcs in the persons life story, because that’s what you’d get if you were interviewing a a real person were pulling the name of a real person from a hat representing your target group…”

Anyways, I got fed up and carved this specific part of my app – I’ll call it “subject generation” – into it’s own module that I could plug into different iterations for testing. Specifically, a Cohort 11 peer had mentioned using Reddit to find target group interviewees, so I tested out OpenAI’s new ChatGPT 4.5 “research preview” model and gave it the following robust research prompt:

You are a deep research assistant tasked with creating realistic bios of real people based on Reddit activity. Follow these steps carefully:

Input: You will be provided with a target group and sub-group. Example: “Target group: First-time dads in their 30s. Sub-group: Stay-at-home dads navigating career transitions.”

Scour Reddit using search operators and forum awareness to find real posts and comment threads where individuals match this group. Prioritize subreddits where this group is likely to share honest, personal stories (e.g., r/daddit, r/stayathomedads, r/careerguidance, r/parenting, r/askmenover30).

Select 3–5 Reddit usernames whose posts or comment history suggest they authentically belong to this group. Extract:

- Notable quotes or anecdotes

- Writing style, tone, and emotional state

- Life facts shared (e.g. age, kids, location, profession, struggles)

- Comment karma or post engagement that signals credibility

For each selected user, write a short bio (120–180 words) that:

- Stays rooted in the facts they posted

- Makes reasonable inferences to fill in gaps without overreaching

- Captures their vibe—humor, weariness, optimism, self-deprecation, etc.

- Feels like something they’d write in a casual “about me” section

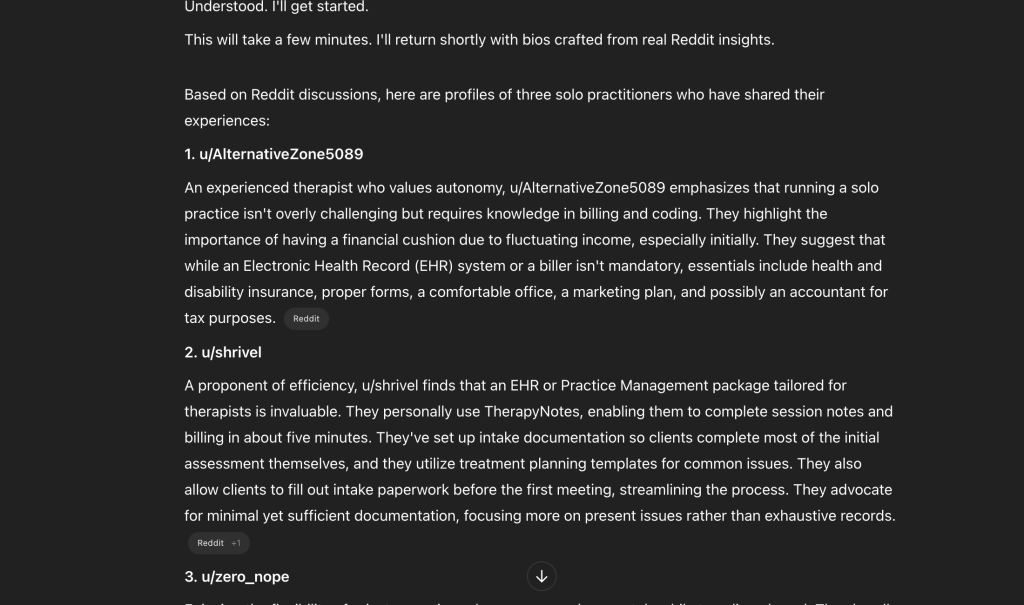

Important: The bios must not idealize, exaggerate, or sanitize. Real people have jagged edges—your job is to understand, not polish. Think like a documentarian with a literary ear, and leave no provided fact out of the picture (only adding where you see appropriate).Ignoring for a moment this fascinating Medium post saying not even Reddit can be trusted for authentic representations of reality, I was impressed with my results with GPT 4.5 which are in the screenshot below if you’re curious (in this case, my target group was specifically solo practitioners in the healthcare space, with links leading back to actual Reddit commentary):

These felt like a stark improvement on my previous iteration, but the market does not currently offer any kind of “research GPT” API that does the sort of internet-crawling used by GPT 4.5. This makes it a non-starter for my app. Side note: someone tell why this API is not available anywhere, despite it being exposed via chat window (with request limits) by Gemini, ChatGPT, and Grok for free/$20?

Anyways, I resolved to write my own Reddit crawler with praw (assisted by AI to generate search query strings) for a v2 of this app, or just stubbing in a single LLM call whenever deep research becomes available via API. Leave a comment if you know of a good example for this.

While I originally wanted to speed ahead and generate full interviews, at this point I’m stopping to write my crawler. I’ll update this post when I get a working version.

Some Hard-Learned Lessons (so far)

1. Start with the data model.

LLMs speak in guesses and don’t instinctually take to data models. You ask it to solve a problem, and it spits out a random mixed bag of logic, prompt strings, and hardcoded hacks all mashed together. Fine for a demo. Terrible for anything you want to maintain or extend.

So I flipped the approach: I defined the data model first, described it clearly to the LLM, and asked it to help me generate a models.py file of clean Python @dataclass definitions. I also wrote a little module to parse JSON for LLM text response with a few try/catches to attempt to remedy the error with last resort to try the call again with extra emphasis on needing JSON output.

2. Break out utils & prompts.

Even with a good data model, the LLM loves putting prompt strings and utility functions right inside your main logic. I had to fight it constantly to pull those out into separate modules and eventually stopped and did it myself. My recommendation: treat your prompts like config — keep them off to the side, versioned, tweakable – and ASAP break out utils like file loading/writing, templating, LLM-wrapping, & data modeling.

3. Output staging is underrated

I started writing the outputs of each step to .txt or sometimes .json files in an outputs/ directory. Simple, but powerful. It let me inspect results between stages, run diffs, tweak inputs, and keep everything modular.

4. The AI doesn’t track your evolving codebase.

Once you start editing, reorganizing, or renaming files, it loses context fast. So don’t treat it like a collaborator. It’s more like a code snippet oracle. Ask good questions, get good snippets, and stitch things together yourself.

The Good Stuff

Despite all that, this was the most fun I’ve had coding in a while.

I burned 8 hours straight without even needing caffeine. Normally I’d be dragging around noon but this felt like play and I had to make my self stop in the afternoon to get the kids. Once I had the data model and a clean mental map, I was able to iterate fast, build things that felt real, and experiment with how to simulate authentic, useful customer data.

It still feels like I skipped a step or 2.

Final Take

AI will happily give you the fastest route to the wrong solution and make it look semi-convincing. It doesn’t really teach you how to do it right. Not yet.

But if you treat it like a tool, slow down, think through your data structures, and give it a little architectural scaffolding… it’s powerful. Especially for hack days or creative prototyping.

Just don’t expect it to replace the part where you actually understand what you’re building. That’s still on you, dawg!